D-Lib Magazine

March/April 2014

Volume 20, Number 3/4

Report on the Capability Assessment and Improvement Workshop (CAIW) at iPres 2013

Christoph Becker

University of Toronto and Vienna University of Technology

christoph.becker@utoronto.ca

Elsa Cardoso

University Institute of Lisbon and INESC-ID

elsa.cardoso@iscte.pt

doi:10.1045/march2014-becker

Abstract

While Digital Preservation is making progress in terms of tool development, progressive establishment of standards and increasing activity in user communities, there is a lack of approaches for systematically assessing, comparing and improving how organizations go about achieving their preservation goals. This currently presents a challenge to many organizations for whom digital stewardship is a concern and reveals a substantial gap between theory and practice. To provide an interactive, focused venue for those interested in systematic approaches to assessment and improvement, we organized the first Capability Assessment and Improvement Workshop (CAIW) in Lisbon on September 5, 2013, as part of the 10th International Conference on Digital Preservation (iPres 2013). This article reports on the issues discussed and attempts to synthesize the main conclusions, with the intention of stimulating further discussion of this topic in the community.

Introduction

Digital Preservation (DP) is making progress in terms of tool development, progressive establishment of standards and increasing activity in user communities. However, there have been few attempts to systematically assess, compare and improve how organizations go about achieving their preservation goals, and there is little systematic, well-founded work on assessing organizational processes and capabilities in a transparent, comparable way. To provide an interactive, focused venue that brings together those responsible for repository operations, management, and governance with those interested in developing and evaluating systematic approaches to assessment, we organized the first Capability Assessment and Improvement Workshop (CAIW) [1] in Lisbon on September 5, 2013, as part of the 10th International Conference on Digital Preservation (iPres 2013) [2]. This article reports on the issues discussed and attempts to summarize the main conclusions, with the intention of stimulating further discussion in the community on a topic that currently reveals both a gap between theory and practice and a lack of robust, well-founded and practically tested approaches.

Overview

The CAIW workshop was organized by the team of the BenchmarkDP project [3], a three-year research project funded by the Vienna Science and Technology Fund. The project focuses on developing a systematic approach to assessing and comparing digital preservation processes, systems, and organizational capabilities. As an important step towards this goal, we invited participants from across the diverse spectrum of preservation stakeholders to discuss the current state of assessment and improvement in the domain, share their experiences in developing, applying and evaluating approaches, and highlight practitioners' specific goals as well as the challenges that lie ahead for research and practice.

The workshop combined presentations of peer-reviewed workshop papers with time for discussion, with the goal of gathering an initial critical mass of stakeholders actively interested in continuing the conversation. We asked for contributions on the systematic assessment and improvement of Digital Preservation activities, processes and systems in real-world organizational settings. We encouraged both practitioners' views on their experiences, challenges and scenarios and researchers' views on the development, evaluation and improvement of systematic approaches. We were thrilled that the call for papers resulted in a set of high-quality papers, which underwent a full review by the international program committee. The committee recommended five papers for acceptance. These were published online ahead of the workshop and presented by their authors, together with two additional presentations and discussion slots [1].

Twenty-two leading experts in digital preservation came to spend a much-too-short half day interacting on these topics. The audience included experienced members of national archives and libraries, funding agencies, university libraries, digital preservation solution providers, and researchers in the areas of preservation and information systems. Some of the motivations for joining this discussion that the participants shared at the outset are well worth repeating here:

- Funding agencies are interested in systematic assessment and improvement because they want to know what effect funding has had on organizations, with an eye on measuring the success of their funding activities.

- Practitioners are sometimes frustrated about the state of digital preservation and the lack of clarity about what the field as a community is aiming for and what its players as a stakeholder group are trying to achieve. This mirrors opinions voiced by several speakers at the 2012 workshop on Open Research Challenges in Digital Preservation, held at iPres 2012, who raised questions related to shifting values and perspectives and the evolving state of the preservation field [4] [5].

- Many researchers and practitioners see the need for community exchange around assessment and for resources that provide guidance for audits and peer reviews. Several informal assessment methods are being piloted by organizations in North America and Australia, with the goal of benchmarking and comparing libraries and other content holders. This raises a question: how can one assess other repositories from the outside, given how strikingly different their backgrounds, incentives and perspectives are?

- Commercial solution providers, for their part, are interested in creating new tools that support emerging capabilities and help organizations strengthen their own capabilities.

Some of the questions that we set out to address in the workshop included the following:

- Why is it difficult to assess how an organization is performing in DP?

- What can we learn from existing approaches?

- What can we learn from assessment in neighboring fields?

- What methods and tools should we be striving to develop or adopt?

The workshop was structured in two major parts. In the first part, several talks presented experiences in designing, applying and evaluating assessment approaches in digital preservation and closely related domains. These were complemented by a presentation of initial results from a survey on preservation capability assessment previously conducted by the BenchmarkDP project. The second part of the workshop started with a reflection on the challenges of designing trustworthy archives based on the domain's reference models, and continued by providing a venue for specific goals and challenges as well as early perspectives on emerging approaches and frameworks that have the potential to advance the state of the art and practice in our domain.

Part 1: Where are we, and where do we want to be?

Shadrack Katuu kicked off the presentation series by discussing the utility of maturity models as a management tool that enables the continuous improvement of specific aspects of an organization [6]. Maturity models are aligned with the Total Quality Management perspective of continuous improvement and have been used in many disciplines, including records and information management, business analytics, project management and energy management. As a follow-up to a study of Enterprise Content Management (ECM) implementations in South Africa [7], his presentation focused on the ECM Maturity Model (ECM3) [8], an open-source framework designed to assess the maturity of organizations implementing ECM applications.

He presented initial results of a case study in which six organizations in South Africa performed an informal self-assessment exercise using ECM3 in a joint workshop setting. The findings point to the need to include other dimensions in ECM3 (such as culture and change management) and to further explore its relation to the long-term preservation of digital records. As Katuu notes, "considering that ECM applications are not ideal to address long term preservation concerns, ECM3 should not be expected to serve as a digital preservation maturity model." [6]

The fact that organizations were often surprised by the assessment results resonated with the workshop participants, several of whom reported similar experiences in organizations in North America and Australia. Since informal approaches tend to be more subjective, a period of critical reflection is crucial to balance the results across different organizations. While this does not make the results objective, it does help to reduce some of the bias that inevitably arises from the subjective elements of the models.

A different perspective was offered by Andrea Goethals of the Harvard University Library, who described the experience of using the National Digital Stewardship Alliance (NDSA) Levels of Digital Preservation [9] for a self-assessment exercise [10]. In the course of work on its digital preservation services roadmap, Harvard University Library carried out a set of self-assessments, focusing on low-effort approaches because of time and resource constraints. The purpose of the self-assessment was not to achieve certification or proof of trustworthiness, but rather to identify the university's key DP needs as well as existing gaps in its organizational and technological infrastructure and resource framework.

The NDSA Levels of DP are a set of simple and practical guidelines developed by the NDSA that can be used both by DP beginners and by experienced organizations aiming to improve their DP activities. The guidelines are organized into five key functional areas of DP systems: storage and geographic location; file fixity and data integrity; information security; metadata; and file formats. The focused self-assessment using the Levels of DP took approximately two hours and was performed by the repository manager, who was already deeply familiar with the technical infrastructure and practices of the Library's preservation repository and was one of the co-authors of the Levels of DP.
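To make the structure of such a self-assessment concrete, the following Python sketch records an achieved level for each of the five functional areas and lists the areas that fall below a chosen target level. It is a hypothetical illustration, not the NDSA's or Harvard's tooling: the function name `find_gaps`, the example scores, and the assumption that levels run from 1 to 4 (as in the 2013 NDSA chart) are ours.

```python
# Hypothetical sketch: recording an NDSA Levels self-assessment and listing
# the functional areas that fall short of a target level.

# The five functional areas named in the NDSA Levels of Digital Preservation.
FUNCTIONAL_AREAS = (
    "Storage and geographic location",
    "File fixity and data integrity",
    "Information security",
    "Metadata",
    "File formats",
)

# Assumption: levels are numbered 1 to 4, as in the 2013 NDSA chart;
# 0 means that not even level 1 has been reached in an area.
MAX_LEVEL = 4


def find_gaps(achieved: dict[str, int], target: int) -> list[str]:
    """Return, in chart order, the areas whose achieved level is below target.

    Areas missing from `achieved` are treated as level 0.
    """
    if not 1 <= target <= MAX_LEVEL:
        raise ValueError(f"target must be between 1 and {MAX_LEVEL}")
    unknown = set(achieved) - set(FUNCTIONAL_AREAS)
    if unknown:
        raise ValueError(f"unknown functional areas: {sorted(unknown)}")
    return [area for area in FUNCTIONAL_AREAS if achieved.get(area, 0) < target]


# Illustrative scores only; these are not Harvard's actual results.
scores = {
    "Storage and geographic location": 3,
    "File fixity and data integrity": 2,
    "Information security": 3,
    "Metadata": 1,
    "File formats": 2,
}
print(find_gaps(scores, target=3))
# ['File fixity and data integrity', 'Metadata', 'File formats']
```

Treating an unrated area as level 0 is a deliberate choice in this sketch: an area that has not been assessed shows up as a gap rather than silently passing.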

While the workshop presentation focused only on the use of the NDSA levels of DP, the exercise as a whole included the use of three other tools. The overall DP program was assessed using the Five Organizational Stages of Digital Preservation [11] and the Survey of Institutional Readiness [12]. The Library's preservation repository was assessed using Tessella's Digital Archiving Maturity Model [13] and the NDSA Levels of DP.

Andrea Goethals suggested four key dimensions to distinguish assessment models:

- What is being assessed? A program, a repository, an initiative?

- How much effort is the assessment? Many organizations have limited resources for such assessments and hence prefer approaches that can be applied easily and show quick benefits.

- How prescriptive is the model? Some approaches, such as TRAC, do not identify avenues for improvement, while the NDSA levels are designed to emphasize improvement opportunities.

- What is the result? While some approaches are aimed at external auditing and certification, others are geared towards informal self-diagnosis.

An interesting observation was that the improvements identified by the various approaches showed minimal overlap. The self-assessment using the NDSA Levels identified five items needing improvement, only one of which was also identified by the other self-assessments. Similarly, the other assessment efforts identified gap areas not identified by the Levels of DP. This led to the conclusion that applying a variety of assessment and data collection methods can help to construct a more comprehensive picture of the needs and gap areas that must be addressed [10].
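The reasoning behind combining methods can be expressed with simple set operations. In the hypothetical sketch below, each method's findings are a set of placeholder gap labels, chosen only to mirror the reported pattern (five items, one of them shared); the intersection shows the overlap, the union the combined picture, and the function `unique_findings` (a name we introduce here) what each method contributed on its own. None of the labels correspond to Harvard's actual findings.

```python
# Hypothetical sketch: comparing the gap items reported by different
# assessment methods. Item labels are placeholders, not real findings.

findings = {
    "NDSA Levels of DP": {"gap 1", "gap 2", "gap 3", "gap 4", "gap 5"},
    "Other self-assessments": {"gap 5", "gap 6", "gap 7"},
}


def unique_findings(findings: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each method, return the items that no other method reported."""
    result = {}
    for method, items in findings.items():
        others = set().union(*(s for m, s in findings.items() if m != method))
        result[method] = items - others
    return result


# Items found by every method: the overlap between assessments.
shared = set.intersection(*findings.values())
# Items found by any method: the combined picture of needs and gaps.
combined = set().union(*findings.values())

print(sorted(shared))  # ['gap 5']
print(len(combined))   # 7
print(sorted(unique_findings(findings)["Other self-assessments"]))  # ['gap 6', 'gap 7']
```

With minimal overlap, the union is much larger than any single method's findings, which is the point made above about using several methods together.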

A crucial aspect that provided a common thread throughout these and the following discussions was the challenge of striking a balance between highly informal, very efficient, but not necessarily objective approaches on the one hand and overly rigid, effort-intensive and complex assessment models on the other. While the discussion did not clearly point to a sweet spot, it is apparent that many members of the community are interested in finding the appropriate balance for a variety of institutional settings.

This observation was also reflected in some of the key findings presented next by Elsa Cardoso, who reported preliminary insights from a survey conducted by BenchmarkDP on the very topic of the workshop [14]. The sample comprised 38 valid answers from experienced and active people in DP (more than 50% had five or more years of experience in the field) from 17 countries, four of which were strongly represented: the UK, the US, Germany, and Portugal. Most respondents came from memory institutions/content holders (34%) and universities (50%) responsible for safeguarding a wide variety of content. The survey revealed that organizations are currently aware of the need for a tool to assess and improve DP processes: 79% of respondents answered that they need such a tool, and found it very important (50%) or important (37%) as a means of providing a roadmap for process improvement.

When asked about the current state of affairs regarding the assessment and improvement of DP activities, most respondents reported performing it either in an ad hoc manner (71%) or through internal audits (53%). The answers showed the community's genuine interest in the development of a systematic tool and approach for assessing and improving DP activities. While several assessment, audit and certification tools exist for digital preservation, there seems to be insufficient work on assessment that is well founded, systematic, tested and practically useful for the community. Almost half of the organizations that answered the survey (47%) do not currently use any tool. Others reported using TRAC/ISO 16363 (26%), the Data Seal of Approval (18%) or the DRAMBORA tool (16%). Other tools mentioned included the NDSA Levels of Digital Preservation and the Nestor Criteria for Trusted Digital Repositories (DIN 31644).

The survey investigated the requirements for a DP assessment and improvement tool from a practitioner's perspective. The answers indicated that members of the community put a strong emphasis on the practicality of such a tool and its entry barrier, expecting tools that are easy to implement, aligned with existing experience, and easy to use, adopt, and adapt. The major organizational obstacles to successfully implementing such a tool, as identified by respondents, are the degree of adoption of the tool (45%) and organizational barriers such as competing priorities and a lack of specialized resources. This of course points to a chicken-and-egg problem not uncommon in this field: who will be the first to adopt new tools?

Given the importance of maturity models for process assessment, the survey also investigated the community's interest in and knowledge of this concept. More than half of the respondents (53%) stated that they already knew how maturity models can be used in process assessment. Among the respondents who were unfamiliar with maturity models (18 out of 38), interest was very high, with 87% stating that they would like to know more about them. This indicates that the community is open to adopting, learning and sharing experiences.

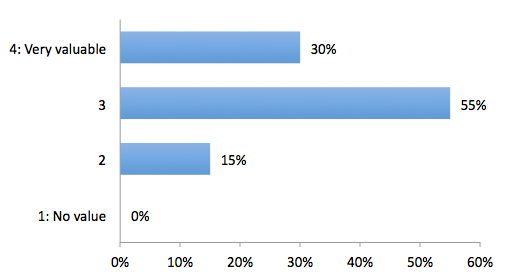

The survey further asked the respondents who were familiar with maturity models (20 out of 38) what value they would assign to a maturity model in assessment and improvement, using a 4-point scale. As displayed in Figure 1, all of them see some value, and the majority of respondents perceive maturity models as a valuable instrument.

Figure 1: Recognized value of a maturity model in assessment and improvement of DP [14]

Part 2: Goals and Challenges

The second part of the workshop was kicked off by Artur Caetano. Taking a very different perspective, he discussed the challenges of developing methods and the contradictory requirements often placed on them. The ideal method is both robust and flexible, yet situated; easy to apply, yet powerful. How can we develop approaches that accommodate such diverse needs and enable the flexible reuse of approaches across different contexts?

His thought-provoking presentation on Situational Method Engineering (SME) discussed the benefits of this research discipline to address the challenges of defining a capability assessment method for digital preservation [15]. Method Engineering is the engineering discipline dedicated to the design, construction, adaptation, and evaluation of methods, techniques and tools for the development of information systems [16].

The rationale for using an SME approach is to achieve flexibility. The method engineering approach advocates that "no specific methodology can solve enough problems and, therefore, methodologies must be specifically created for a particular set of requirements" [17]. SME uses the principles of modularity and reuse, assembling coherent pieces of information systems development (ISD) methods [18], called method fragments, into situational methods. SME needs to be supported both by a repository, or Method Library, that stores method fragments and by a configuration process that guides the selection of method fragments and the assembly of situational methods.
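As an illustration of the library-plus-configuration idea, the following Python sketch stores method fragments in a small method library and assembles a situational method by selecting every fragment whose situational requirements all hold. The class names, the fragment names, and the subset-based selection rule are hypothetical simplifications introduced for this report; configuration processes in the SME literature are considerably richer, for example in how fragments are ordered and connected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MethodFragment:
    """A reusable piece of an assessment method (hypothetical representation)."""
    name: str
    # Situational factors that must all hold for the fragment to be selected;
    # an empty set marks a core fragment included in every assembled method.
    requires: frozenset[str] = frozenset()


class MethodLibrary:
    """Stores method fragments and assembles situational methods from them."""

    def __init__(self) -> None:
        self._fragments: list[MethodFragment] = []

    def add(self, fragment: MethodFragment) -> None:
        self._fragments.append(fragment)

    def assemble(self, situation: set[str]) -> list[str]:
        """Select, in library order, every fragment whose requirements are a
        subset of the situation (a deliberately simple configuration rule)."""
        return [f.name for f in self._fragments if f.requires <= situation]


# Hypothetical fragments for a DP capability assessment.
library = MethodLibrary()
library.add(MethodFragment("Define assessment scope"))
library.add(MethodFragment("Self-assessment questionnaire", frozenset({"low effort"})))
library.add(MethodFragment("Evidence collection for external audit", frozenset({"certification"})))
library.add(MethodFragment("Improvement roadmap", frozenset({"improvement focus"})))

print(library.assemble({"low effort", "improvement focus"}))
# ['Define assessment scope', 'Self-assessment questionnaire', 'Improvement roadmap']
```

The same library yields a different method for an organization seeking certification, which is the kind of reuse across contexts that motivates SME.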

This new perspective was welcomed in the discussion, which at the same time highlighted the challenges of developing an appropriate framework and the difficulties of establishing a solid knowledge base within the community that can serve as a starting point for developing method fragments and methods.

Hannes Kulovits, head of the Digital Archive of the Austrian State Archives, presented a critical view of existing approaches to designing archival systems and DP initiatives, discussing the OAIS (Open Archival Information System) reference model and ISO 16363 (Audit and certification of trustworthy digital repositories) from a practitioner's perspective. Kulovits discussed the approach the Austrian State Archives have followed since 2007/2008, which involved deploying a system to store and preserve electronic records created by the federal government and administration of Austria [19]. A key aspect of this project was the decision to outsource the information technology operation of hardware and software, which demanded a clear definition of requirements and responsibilities.

He noted the pervasive but incorrect impression that the OAIS is a design that could be implemented, and discussed the challenges of deriving detailed specifications from high-level models as well as the tendency to describe solutions instead of needs. Some of these issues point to practical gaps in the application of requirements analysis and systems design techniques. However, they also point towards requirements for a more systematic approach to the assessment and improvement of DP activities, processes and systems. The set of requirements he proposed comprises the following aspects:

- An adherence to existing standards;

- A clear distinction between business process and information system;

- An explicit focus on "what" instead of "how";

- An explicit recognition of required organizational skills and expertise;

- The specification of legitimate evidence;

- The adoption of best practices in specific areas required for successful DP;

- Support for an experience base; and

- Enabling communication to management, customers and stakeholders [19].

Given the earlier discussion of the sweet spot in assessment methods, these requirements should clearly be in the minds of designers of assessment approaches. From this perspective, it was very interesting to see two more formal approaches to process assessment close the day.

One was presented by Lucas Colet of the Public Research Centre Henri Tudor in Luxembourg. His process assessment method for digital record preservation and information security [20] is based on the process assessment standard ISO/IEC 15504-2 [21]. The work is motivated by the legal framework in Luxembourg regarding digital records and by a technical regulation that sets out requirements and measures for certifying Digitalization or Archiving Service Providers (PSDC) [22]. This new technical regulation is based on information security standards. The PSDC regulation provides only a list of requirements, without guidelines to help organizations implement them. The presented process assessment method was developed to support organizations, mainly small and medium enterprises, in implementing digital records preservation and information security. It also aims to increase the adoption of the new technical regulation by adding requirements for records management and DP. To this end, the method integrates best practices in the areas of records management (ISO 15489-1), digital preservation (OAIS), digitization (ISO/TR 13028), and information security (PSDC, ISO/IEC 27001, ISO/IEC 27002).

The process assessment method uses a combination of three tools of differing complexity. The TIPA® Assessment for ERM is based on the TIPA® (Tudor IT Process Assessment) framework [23], an assessment method based on ISO/IEC 15504-2. The Gap Analysis is a questionnaire with 340 questions and is much less demanding in terms of resources. A "Lite Assessment" method based on TIPA is also introduced, recognizing that the cost of a full TIPA assessment may be prohibitive for many organizations.
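To give a flavour of what a 15504-based assessment produces, the sketch below encodes the rating rules as we understand them from ISO/IEC 15504-2: each process attribute is rated on an N/P/L/F scale (not, partially, largely or fully achieved, with thresholds at 15%, 50% and 85%), and a process reaches a capability level when that level's attributes are at least largely achieved and all lower-level attributes are fully achieved. This is a simplified, hypothetical sketch, not the TIPA method or the assessment described in [20]; the function names and the example ratings are invented for illustration.

```python
# Simplified sketch of capability-level rating as we understand ISO/IEC 15504-2;
# TIPA-specific practices (interviews, evidence, indicators) are not modelled.

# Process attributes per capability level (level 0, "Incomplete", has none).
PROCESS_ATTRIBUTES = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def rate(achievement: float) -> str:
    """Map a percentage of achievement onto the N/P/L/F rating scale."""
    if not 0 <= achievement <= 100:
        raise ValueError("achievement must be a percentage between 0 and 100")
    if achievement <= 15:
        return "N"  # not achieved
    if achievement <= 50:
        return "P"  # partially achieved
    if achievement <= 85:
        return "L"  # largely achieved
    return "F"      # fully achieved


def capability_level(ratings: dict[str, str]) -> int:
    """A level is reached when its attributes are rated L or F and all
    attributes of lower levels are rated F; unrated attributes count as N."""
    level = 0
    for candidate in range(1, 6):
        current = PROCESS_ATTRIBUTES[candidate]
        lower = [pa for lvl in range(1, candidate) for pa in PROCESS_ATTRIBUTES[lvl]]
        if not all(ratings.get(pa, "N") in ("L", "F") for pa in current):
            break
        if not all(ratings.get(pa, "N") == "F" for pa in lower):
            break
        level = candidate
    return level


# Hypothetical ratings for a single process.
ratings = {"PA 1.1": "F", "PA 2.1": "F", "PA 2.2": "L", "PA 3.1": "L", "PA 3.2": "L"}
print(capability_level(ratings))  # 2: PA 2.2 is only "L", which blocks level 3
```

The example shows why such assessments point to concrete improvements: raising PA 2.2 to fully achieved would, under these rules, lift the process to level 3.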

Finally, Diogo Proenca, a PhD researcher in Lisbon, presented an early perspective on applying Situational Method Engineering to capability assessment in Digital Preservation, aiming for a flexible, extensible framework that can serve as the basis for developing situated assessment methods. The core elements of the approach build on the concepts of Situational Method Engineering and attempt to separate the distinct aspects of assessment, so that these decoupled modules can be used largely independently of each other [24].

Summary

The topic of the workshop drew much more interest than anticipated and, in particular, revealed an astonishing density and variety of observations, perspectives, approaches and challenges. The workshop itself would have benefited greatly from being extended to a full day, which unfortunately was not feasible. The discussions, however, continued in an even friendlier environment over dinner.

The discussions demonstrated strong interest in the community in new approaches, provided inspiring motivations for future work, and encouraged continued engagement across the community's stakeholders. They also highlighted several crucial challenges. Among these, we observe most importantly the need to strike an appropriate balance between simplicity and ease of use on the one hand and expressive, trustworthy, meaningful results on the other hand.

The location of the sweet spot will depend on several factors that vary across organizations, domains, and scenarios. Understanding these factors will be an important step towards an open approach that unites flexibility, robustness, ease of use and value, and that is accessible and useful for the increasing diversity of organizations for whom digital stewardship is a concern.

Acknowledgements

Part of this work was supported by the Vienna Science and Technology Fund (WWTF) through the project BenchmarkDP (ICT12-046).

References

[1] Capability Assessment and Improvement Workshop (CAIW) at iPres 2013 website.

[2] The 10th International Conference on Digital Preservation (iPres 2013) website.

[3] BenchmarkDP website.

[4] McKinney, P., Benson, L. and Knight, S. (2012). From Hobbyist to Industrialist. Challenging the DP Community. In: Open Research Challenges Workshop at iPres 2012.

[5] Borbinha, J. (2012). The value dimensions of Digital Preservation. In: Open Research Challenges Workshop at iPres 2012.

[6] Katuu, S. (2013). The Utility of Maturity Models — The ECM Maturity Model within a South African context. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[7] Katuu, S. (2012). Enterprise Content Management (ECM) implementation in South Africa. Records Management Journal, 22(1), 37-56. http://doi.org/10.1108/09565691211222081

[8] Pelz-Sharpe, A., Durga, A., Smigiel, D., Hartman, E., Byrne, T. and Gingras, J. (2010). ECM 3 — ECM maturity model.

[9] Phillips, M., Bailey, J., Goethals, A. and Owens, T. (2013). The NDSA Levels of Digital Preservation: An Explanation and Uses. IS&T Archiving, Washington, USA.

[10] Goethals, A. (2013). An Example Self-Assessment Using the NDSA Levels of Digital Preservation. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[11] Kenney, A. and McGovern, N. (2003). The Five Organizational Stages of Digital Preservation. In: Digital Libraries: A Vision for the Twenty First Century, a festschrift to honor Wendy Lougee. http://hdl.handle.net/2027/spo.bbv9812.0001.001

[12] Kenney, A. and McGovern, N. Institutional Readiness Survey. Digital Preservation Management Tutorial.

[13] Tessella (2012). Digital Archiving Maturity Model.

[14] Cardoso, E. (2013). Preliminary results of the survey on Capability Assessment and Improvement. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[15] Caetano, A. (2013). Situational Method Engineering and Capability Assessment. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[16] Brinkkemper, S. (1996). Method Engineering: engineering of information system development methods and tools. Information and Software Technology, 38, pp. 275-280. http://doi.org/10.1016/0950-5849(95)01059-9

[17] Rolland, C. (2007). Situational Method Engineering: Fundamentals and Experiences. In: IFIP International Federation for Information Processing, Volume 244, p. 6. Ralyté, J., Brinkkemper, S. and Henderson-Sellers, B. (Eds.), Boston, Springer.

[18] Gonzalez-Perez, C. (2007). Supporting Situational Method Engineering with ISO/IEC 24744 and the Work Product Tool Approach. In: IFIP International Federation for Information Processing, Volume 244, pp. 7-18. Ralyté, J., Brinkkemper, S. and Henderson-Sellers, B. (Eds.), Boston, Springer.

[19] Kulovits, H. (2013). A perspective of the Austrian State Archives on the systematic assessment and improvement of Digital Preservation activities, processes and systems. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[20] Colet, L. and Renault, A. (2013). Process Assessment for Digital Records Preservation and Information Security. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

[21] International Organization for Standardization. ISO/IEC 15504-2:2003: Information Technology — process assessment — part 2: Performing an assessment.

[22] ILNAS, Institut Luxembourgeois de la Normalisation, de l'Accréditation, de la Sécurité et qualité des produits et services. (2013). Technical regulation requirements and measures for certifying Digitalization or Archiving Service Providers (PSDC).

[23] Barafort, B., Betry, V., Cortina, S., Picard, M., St Jean, M., Renault, A., Valdés, O. and Tudor (2009). ITSM Process Assessment Supporting ITIL: Using TIPA to Assess and Improve your Processes with ISO 15504 and Prepare for ISO 20000 Certification. Van Haren Publishing, Zaltbommel, Netherlands.

[24] Proenca, D. (2013). A systematic approach for the assessment of digital preservation activities. In: Capability Assessment and Improvement Workshop (CAIW) at iPres 2013, Lisbon, Portugal.

About the Authors

Christoph Becker is Assistant Professor at the Faculty of Information, University of Toronto and Associate Director of the Digital Curation Institute at the Faculty of Information, as well as Senior Scientist at the Software and Information Engineering Group, Vienna University of Technology. His research focuses on digital curation and issues of longevity in information systems, combining perspectives of systems and software engineering, information management, digital preservation, decision analysis, and enterprise governance of IT. Find out more at his websites: http://ischool.utoronto.ca/christoph-becker and http://www.ifs.tuwien.ac.at/~becker/.

Elsa Cardoso is an Assistant Professor at the Department of Information Science and Technology at ISCTE — University Institute of Lisbon (ISCTE-IUL) and an invited researcher at the Information Systems Group, INESC-ID. Her research interests include business intelligence and data warehousing, performance management, balanced scorecard and business process management. Find out more at her website: http://home.iscte-iul.pt/~earc/.
